Cloud Computing for Data Analysis: A Guide

Feeling like you're drowning in data? It's a common problem. Trying to manage it all with traditional, on-premise systems is like trying to fit the entire internet onto a single bookshelf. It’s slow, expensive, and just doesn't scale.

This is where cloud computing for data analysis comes in. Think of it as a massive, instantly accessible digital library with all the most advanced research tools at your fingertips. It gives you the power and speed to turn that data mountain into a real strategic asset.

Why Your Data Strategy Needs the Cloud

For a long time, the only way to do data analysis was in-house. Companies had to buy, manage, and maintain their own servers in private data centers. This gave them a sense of control, but it was also a major headache.

Scaling up meant buying more expensive hardware, a slow-moving process that could never quite keep up with the explosion of data. In today's world, where quick decisions are everything, this old model has become a serious bottleneck.

Moving to the cloud isn't just an IT upgrade; it’s a complete shift in how a business operates. Instead of being boxed in by physical hardware, you get access to virtually unlimited storage and processing power whenever you need it. This kind of agility allows you to experiment, innovate, and react to market shifts faster than ever before.

From Capital Expense to Operational Advantage

One of the biggest game-changers is the financial model. On-premise setups demand huge upfront investments (CapEx) and come with never-ending bills for maintenance, power, and cooling. The cloud flips this completely.

- Pay-as-you-go Model: You only pay for what you actually use. This turns those massive capital outlays into predictable operational expenses (OpEx).

- Reduced Overhead: The cloud provider takes care of all the hardware maintenance, security patches, and facility management. This frees up your IT team to focus on work that actually drives the business forward.

- Instant Scalability: Need a ton of processing power for a big analytics job? You can spin it up in minutes—not months. When you’re done, you can scale right back down and stop paying for resources you no longer need.
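A quick back-of-the-envelope calculation shows how the two financial models compare. This is a minimal sketch in which every price, server count, and usage figure is a hypothetical placeholder, not a real vendor rate. The point is the shape of the comparison: on-premise capacity is sized for peak and paid for whether it is used or not, while pay-as-you-go bills only the hours you actually consume.

```python
# Back-of-the-envelope comparison: fixed on-premise capacity vs pay-as-you-go.
# Every number here is a HYPOTHETICAL placeholder, not a real vendor price.

def on_prem_annual_cost(servers: int, capex_per_server: float,
                        lifetime_years: int, opex_per_server_year: float) -> float:
    """Hardware amortized over its lifetime, plus yearly power, cooling, and maintenance."""
    amortized = servers * capex_per_server / lifetime_years
    return amortized + servers * opex_per_server_year


def cloud_annual_cost(server_hours_used: float, price_per_server_hour: float) -> float:
    """Pay-as-you-go: you are billed only for the server-hours you consume."""
    return server_hours_used * price_per_server_hour


# Hypothetical workload: 20 servers needed at peak, but only 4 on a typical day.
PEAK_SERVERS = 20
TYPICAL_SERVERS = 4
PEAK_HOURS = 500          # hours per year spent at peak load
HOURS_PER_YEAR = 8760

# On-premise must own enough hardware for the peak all year round.
on_prem = on_prem_annual_cost(servers=PEAK_SERVERS, capex_per_server=10_000,
                              lifetime_years=4, opex_per_server_year=1_500)

# In the cloud you scale up for the peak hours and back down the rest of the time.
hours_used = PEAK_SERVERS * PEAK_HOURS + TYPICAL_SERVERS * (HOURS_PER_YEAR - PEAK_HOURS)
cloud = cloud_annual_cost(hours_used, price_per_server_hour=0.50)

print(f"On-premise (sized for peak): ${on_prem:,.0f}/year")
print(f"Pay-as-you-go:               ${cloud:,.0f}/year")
```

The gap depends heavily on utilization. A workload that runs near peak around the clock can be cheaper on owned hardware, which is why it's worth rerunning this kind of comparison with your own figures.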

This flexibility is why cloud adoption has skyrocketed. Today, about 94% of enterprises use cloud services, and a staggering 60% of all corporate data is already stored there. The market is on track to hit an estimated $1.614 trillion by 2030, driven by the intense demand for platforms that can handle AI and big data. If you're curious about the numbers, you can dig into this report on cloud computing statistics.

The core value is simple: The cloud breaks down the barriers to sophisticated data analysis. Tools and technologies that were once only available to giant corporations are now accessible to businesses of any size, leveling the playing field.

Moving your analytics to the cloud is no longer a choice—it's essential for any company that wants to make sense of its data. It unlocks capabilities that are simply out of reach with older systems. It's also the foundation for platforms like HydraNode, which use cloud infrastructure to build powerful AI-driven tools. The cloud provides the engine to turn raw information into the insights that fuel growth.

Understanding Your Cloud Analytics Toolkit

Jumping into cloud computing for data analysis can feel like stepping into a workshop filled with unfamiliar tools and a language all its own. To get your bearings, let's start with a simple analogy: getting a pizza. Your options range from making it from scratch to having it delivered, and the choice depends entirely on how much of the work you want to handle yourself.

This simple idea perfectly maps to the three main cloud service models. Each one offers a different trade-off between control and convenience. The model you choose will depend on your team's technical skills, what you're trying to analyze, and how deep into the nuts and bolts you want to get. These models are the very foundation of your cloud analytics setup.

The Three Flavors of Cloud Services

Your first big decision is picking a service model. Each one—IaaS, PaaS, and SaaS—determines what the cloud provider manages versus what you manage.

- Infrastructure as a Service (IaaS): This is the “make-from-scratch” pizza. Your cloud provider hands you the raw ingredients and a professional kitchen: virtual servers, storage, and networking. You have total control to build your environment from the ground up, which is ideal for highly custom or complex data pipelines.

- Platform as a Service (PaaS): Think of this as a “take-and-bake” pizza kit. The provider manages the underlying servers and operating systems, giving you a pre-built platform complete with databases, development tools, and analytics frameworks. You bring your own data and applications, which dramatically cuts down on development time.

- Software as a Service (SaaS): This is the pizza delivered right to your door, hot and ready to eat. You just use the software, paying no mind to the platform or infrastructure it runs on. Analytics tools like Google Analytics or Salesforce are classic examples: you log in and start analyzing data instantly.

For most serious data analysis work, you'll likely find yourself working with IaaS and PaaS. They provide the right mix of flexibility and power needed to build tailored, high-performance analytics solutions.

Organizing Your Data: The Data Lake vs. The Data Warehouse

Once you’ve chosen your cloud "kitchen," you need somewhere to put your ingredients—your data. Two concepts are absolutely central to cloud computing for data analysis: the data lake and the data warehouse. It’s important to know they aren't competing technologies; they're complementary systems designed for very different jobs.

A data warehouse is like a perfectly organized pantry. It stores structured, processed data that's been cleaned up and arranged for a specific purpose. This is where you put the data for your financial reports, sales dashboards, and key performance indicators (KPIs). The information is trustworthy, consistent, and ready for quick analysis.

A data lake, however, is like a massive, walk-in refrigerator where you can toss any ingredient in its original packaging. It’s built to hold enormous volumes of raw, unstructured, and semi-structured data—things like raw text, social media feeds, images, and sensor readings—without needing to define its purpose upfront. This makes it the perfect playground for data scientists to explore new ideas and train machine learning models.

Estimates suggest the cloud will hold over 100 zettabytes of data by 2025. At that scale, well-designed data lakes and warehouses are essential for finding meaningful insights without getting completely lost.

These two systems almost always work together. Raw data is collected in the data lake. From there, specific subsets of data are extracted, transformed, and loaded into the data warehouse for structured business reporting. This hybrid strategy gives you the best of both worlds: the raw potential for deep discovery and the refined data needed for day-to-day decisions.
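The lake-to-warehouse flow described above is the classic extract, transform, load (ETL) pattern. Here is a minimal Python sketch of it, assuming a handful of in-memory JSON strings as a stand-in for raw files in a data lake and an in-memory SQLite database as a stand-in for a cloud warehouse. A real pipeline would read from object storage and write to a managed warehouse service instead.

```python
import json
import sqlite3

# Stand-in for raw files in a data lake: semi-structured JSON events that are
# inconsistent and occasionally malformed (all records here are made up).
RAW_LAKE_RECORDS = [
    '{"event": "purchase", "sku": "A1", "amount": "19.99", "region": "eu"}',
    '{"event": "page_view", "url": "/home"}',
    '{"event": "purchase", "sku": "B2", "amount": "5.00", "region": "US"}',
    'not valid json',
    '{"event": "purchase", "sku": "A1", "amount": "19.99", "region": "EU"}',
]


def extract(lines):
    """Extract: parse raw records, skipping anything that is not valid JSON."""
    for line in lines:
        try:
            yield json.loads(line)
        except json.JSONDecodeError:
            continue  # a real pipeline would route this to a quarantine area


def transform(records):
    """Transform: keep only purchases and coerce them into a clean, typed shape."""
    for rec in records:
        if rec.get("event") != "purchase":
            continue
        yield (rec["sku"], float(rec["amount"]), rec["region"].upper())


def load(rows, conn):
    """Load: write the cleaned rows into a structured warehouse table."""
    conn.execute("CREATE TABLE IF NOT EXISTS sales (sku TEXT, amount REAL, region TEXT)")
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)
    conn.commit()


warehouse = sqlite3.connect(":memory:")  # SQLite stands in for a cloud warehouse
load(transform(extract(RAW_LAKE_RECORDS)), warehouse)

# The warehouse side is now clean enough for straightforward business reporting.
for region, total in warehouse.execute(
        "SELECT region, ROUND(SUM(amount), 2) FROM sales GROUP BY region ORDER BY region"):
    print(region, total)
```

Note that the raw page-view event and the malformed line stay in the lake untouched; only the subset needed for sales reporting makes it into the warehouse.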

The Business Case for Cloud Data Analysis

Moving your data analysis to the cloud isn't just a technical tweak—it's a strategic move with real, bottom-line impact. Forget just renting servers; this shift can fundamentally change how your business operates, innovates, and stands out from the competition. By embracing cloud computing for data analysis, you open the door to a whole new level of performance and efficiency.

Let's break down the four key areas where the cloud completely outshines traditional, on-premise systems. These advantages—scalability, cost-efficiency, tool accessibility, and collaboration—build on each other to make a powerful case for making the switch. Each one solves a critical problem that businesses today face when trying to keep up with their ever-growing data.

Achieve Unmatched Elastic Scalability

Imagine trying to catch rainwater with a fixed-size bucket. During a sudden downpour, it overflows, and you lose valuable water. In a drought, the bucket sits mostly empty, a wasted resource. This is exactly what it’s like to rely on on-premise infrastructure.

Cloud platforms, on the other hand, are built on a principle of elasticity. Think of your resources as a container that instantly expands or shrinks to perfectly match the amount of data you need to process at any given moment. This is a game-changer for analytics, where workloads can be incredibly unpredictable.

- Handle Peak Loads: You can easily manage huge influxes of data during busy times—like a retailer on Black Friday or a finance firm closing out the month—with zero slowdowns.

- Eliminate Over-provisioning: You no longer have to buy and maintain hardware for your absolute busiest day of the year. This stops you from paying for expensive servers that sit idle most of the time, cutting waste right out of your budget.
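Elasticity is usually driven by a simple control rule. The sketch below uses the proportional formula documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current × observed / target), clamped to minimum and maximum limits. The demand figures, the 100-requests-per-second target, and the limits are hypothetical, and real autoscalers add refinements such as tolerances and cooldown windows.

```python
import math


def desired_replicas(current: int, per_replica_load: float, target_per_replica: float,
                     min_replicas: int = 1, max_replicas: int = 40) -> int:
    """Proportional rule: ceil(current * observed / target), clamped to [min, max]."""
    raw = math.ceil(current * per_replica_load / target_per_replica)
    return max(min_replicas, min(max_replicas, raw))


# Hypothetical total demand (requests/second) at different points in time.
demand = [("quiet night", 150), ("morning", 1200),
          ("Black Friday peak", 9000), ("late evening", 300)]
TARGET = 100  # hypothetical target: 100 requests/second per replica

replicas = 4
history = []
for label, total_rps in demand:
    per_replica = total_rps / replicas  # the per-replica metric an autoscaler would observe
    replicas = desired_replicas(replicas, per_replica, TARGET)
    history.append(replicas)
    print(f"{label}: {replicas} replicas")
```

The fleet shrinks to 2 replicas overnight and grows to 12 in the morning, but at the peak it stops at `max_replicas` even though 90 would be needed. A cap like that trades some peak capacity for a bounded bill, so it is worth setting deliberately.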

This dynamic scaling means your analytics power always matches your business needs, giving you agility without the handcuffs of physical hardware.

Gain Superior Cost Efficiency

The financial model is one of the most compelling reasons to move to the cloud. Traditional IT demands massive upfront capital investments (CapEx) for servers, storage, and networking gear, not to mention the ongoing operational costs for power, cooling, and maintenance.

The cloud completely flips this model on its head, turning it into a predictable operational expense (OpEx). You're subscribing to a service, not buying an asset. This pay-as-you-go approach delivers some serious financial perks.

By moving to the cloud, you trade large, risky capital investments for variable expenses that align directly with your actual usage. This frees up capital for other core business activities and makes your IT spending more transparent and manageable.

This shift doesn't just improve your cash flow; it makes budgeting for data analytics projects much simpler. You can check out our guide to HydraNode pricing models to see how flexible plans can make advanced tools surprisingly affordable.

Democratize Access to Powerful Tools

Not long ago, advanced analytics, artificial intelligence (AI), and machine learning (ML) were luxuries only available to massive corporations with deep pockets and specialized teams. Building and maintaining the necessary infrastructure was simply too expensive for most companies.

Cloud providers have leveled the playing field. They offer sophisticated, ready-to-use analytics and AI/ML services that any business can access on a subscription basis. This democratization of technology means a small startup can use the same powerful predictive analytics engine as a Fortune 500 company. It gives you the freedom to experiment and innovate without a multi-million dollar upfront investment.

Foster Enhanced Collaboration and Innovation

Data silos are innovation killers. When your data is trapped on different departmental servers, it’s nearly impossible for teams to work together effectively. The cloud breaks down these barriers by creating a single, centralized place for all your data.

This matters more than ever as data volumes continue to explode. By 2025, experts predict that over 100 zettabytes of data will be stored in the cloud. And with 47% of corporate data in the cloud classified as sensitive, secure collaboration is non-negotiable.

Cloud platforms provide the robust security and access controls needed to let teams—whether they're across the hall or across the globe—securely work on the same datasets in real-time. This unified environment doesn't just speed up projects; it sparks new ideas and helps build a more agile, data-driven culture. To get a better sense of this explosive growth, you can explore more detailed cloud computing statistics.

Choosing Your Cloud Data Architecture

Once you have your cloud toolkit, the next big question is how to build with it. Selecting a data architecture isn't just a technical detail; it’s about laying the right foundation for your business goals. Are you trying to power real-time dashboards or train sophisticated machine learning models? The blueprint you choose has to match the job.

Let's walk through the most common and effective architectural patterns for cloud computing for data analysis. Each one offers a different way to organize and manage information, turning raw data into the intelligence that drives decisions.

The Classic Data Warehouse

Think of a traditional data warehouse as a perfectly organized research library. Every piece of information—mostly structured data like sales figures or customer records—has been carefully cleaned, categorized, and put in a specific spot where it's easy to find. This meticulous organization makes it the ideal setup for business intelligence (BI) and reporting.

When your team needs to pull together a consistent financial report or track key performance indicators (KPIs) on a dashboard, the data warehouse is your best friend. Its main job is to provide a single, reliable source of truth for critical business metrics, ensuring everyone is working from the same high-quality, pre-processed data.
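Warehouses commonly organize data as a star schema: a central fact table of events (such as sales) joined to dimension tables that describe them (such as products and dates). Here is a minimal sketch using Python's built-in SQLite as a stand-in for a cloud warehouse; the table names, columns, and rows are illustrative only.

```python
import sqlite3

# A tiny star schema: one fact table of sales plus two dimension tables.
# Table names, columns, and rows are illustrative, not from any real system.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE dim_product (product_id INTEGER PRIMARY KEY, category TEXT);
CREATE TABLE dim_date    (date_id INTEGER PRIMARY KEY, month TEXT);
CREATE TABLE fact_sales  (product_id INTEGER REFERENCES dim_product,
                          date_id    INTEGER REFERENCES dim_date,
                          revenue    REAL);

INSERT INTO dim_product VALUES (1, 'Outerwear'), (2, 'Swimwear');
INSERT INTO dim_date    VALUES (1, '2024-01'), (2, '2024-02');
INSERT INTO fact_sales  VALUES (1, 1, 120.0), (1, 2, 80.0), (2, 1, 15.0), (2, 2, 35.0);
""")

# Every dashboard runs the same KPI definition against the same clean tables,
# which is what lets the warehouse act as a single source of truth.
KPI_REVENUE_BY_MONTH_AND_CATEGORY = """
SELECT d.month, p.category, SUM(f.revenue) AS revenue
FROM fact_sales AS f
JOIN dim_product AS p ON p.product_id = f.product_id
JOIN dim_date    AS d ON d.date_id    = f.date_id
GROUP BY d.month, p.category
ORDER BY d.month, p.category
"""

for row in conn.execute(KPI_REVENUE_BY_MONTH_AND_CATEGORY):
    print(row)
```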

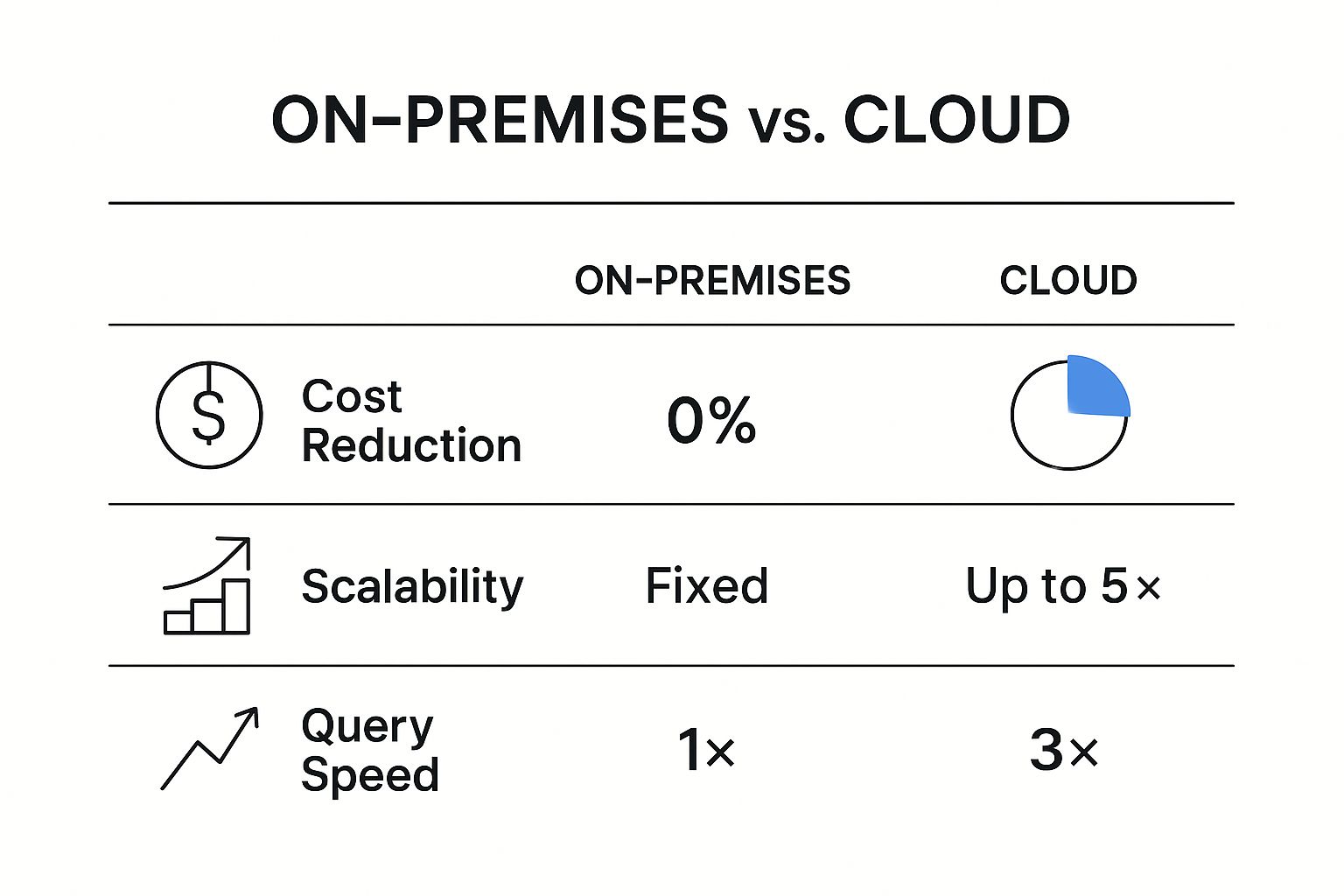

The move from on-premise systems to the cloud has made these warehouses even more powerful, and the difference shows up in both performance and cost.

Shifting to a cloud-based warehouse can cut costs by almost half and make queries run three times faster, though the exact gains depend on your workload. That's a massive efficiency gain.

The Modern Data Lake

On the other end of the spectrum is the data lake. Imagine this as a massive, raw archive—a place to dump every document, image, and recording you can get your hands on. It’s built to hold enormous volumes of raw, unstructured data, from social media posts and IoT sensor readings to system logs and videos, all without needing a predefined structure.

This incredible flexibility is exactly what data scientists and machine learning engineers need. They can dive into the raw data, test out new ideas, and build predictive models without being boxed in by a rigid schema. If you're doing exploratory analysis or developing advanced AI, the data lake is your playground.

A data lake offers immense potential for discovery. By storing everything in its native format, you create an environment where future analytical questions, even ones you haven't thought of yet, can be answered.
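That flexibility comes from schema-on-read: records are stored as they arrive, and each analysis decides how to interpret them at query time. A minimal Python sketch of the idea, using made-up JSON records in place of files in object storage:

```python
import json

# Raw, heterogeneous records as they might land in a data lake (made-up examples).
# Nothing forced them into a common schema when they were written.
raw_objects = [
    '{"device": "sensor-7", "temp_c": 21.5, "ts": "2024-05-01T10:00:00Z"}',
    '{"device": "sensor-9", "temperature": {"value": 70.7, "unit": "F"}, "ts": "2024-05-01T10:00:05Z"}',
    '{"user": "u42", "text": "loving the new jacket", "ts": "2024-05-01T10:00:07Z"}',
]


def read_temperatures(lines):
    """Schema-on-read: decide at query time which fields matter and how to interpret them."""
    for line in lines:
        rec = json.loads(line)
        if "temp_c" in rec:
            yield rec["device"], rec["temp_c"]
        elif "temperature" in rec:
            reading = rec["temperature"]
            value = reading["value"]
            if reading.get("unit") == "F":
                value = (value - 32) * 5 / 9  # normalize Fahrenheit to Celsius
            yield rec["device"], round(value, 1)
        # Records without a temperature (e.g. social posts) are ignored by this query,
        # but they stay in the lake for whatever question comes next.


print(list(read_temperatures(raw_objects)))
```

A different analysis, say sentiment on the social posts, would read the very same files with a completely different interpretation.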

The Best of Both Worlds: The Lakehouse

For a long time, businesses felt they had to choose: the structured reliability of a warehouse or the flexible potential of a lake. The Lakehouse architecture was created to end that dilemma by merging the two ideas into one unified platform.

A lakehouse combines the cheap, scalable storage of a data lake with the rock-solid data management and transaction features of a data warehouse. This means you can keep all your data—structured, semi-structured, and unstructured—in one location while still applying proper governance, quality controls, and ACID transactions directly on the raw files. This hybrid approach lets you run both BI reports and machine learning projects from a single system, dramatically simplifying your data stack.
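Lakehouse table formats such as Delta Lake and Apache Iceberg get their transactional guarantees by layering a transaction log or metadata layer on top of plain data files. The Python below is a heavily simplified sketch of the log idea, not the format of any real system: a writer stages a data file, then commits by publishing the next numbered log entry with an atomic put-if-absent (here, `os.link`), and readers see only files that a committed entry lists. The class and file names are hypothetical.

```python
import json
import os
import tempfile
import uuid


class TinyLakehouseTable:
    """Toy table: plain data files plus an ordered log of commits.

    A deliberately simplified illustration of the transaction-log idea,
    not the on-disk format of Delta Lake, Iceberg, or any other real system.
    """

    def __init__(self, root: str):
        self.root = root
        self.log_dir = os.path.join(root, "_log")
        os.makedirs(self.log_dir, exist_ok=True)

    def _latest_version(self) -> int:
        versions = [int(name.split(".")[0]) for name in os.listdir(self.log_dir)]
        return max(versions, default=-1)

    def commit(self, rows: list) -> int:
        # 1. Stage a uniquely named data file. Readers ignore it until a commit lists it.
        data_name = f"part-{uuid.uuid4().hex}.json"
        with open(os.path.join(self.root, data_name), "w") as f:
            json.dump(rows, f)
        # 2. Write the commit entry to a temp file, then publish it with os.link,
        #    which raises FileExistsError if that version already exists. A writer
        #    that loses the race must re-read the log and retry.
        version = self._latest_version() + 1
        tmp_path = os.path.join(self.root, f"tmp-{uuid.uuid4().hex}")
        with open(tmp_path, "w") as f:
            json.dump({"add": [data_name]}, f)
        try:
            os.link(tmp_path, os.path.join(self.log_dir, f"{version:05d}.json"))
        finally:
            os.remove(tmp_path)
        return version

    def read(self) -> list:
        """Return rows from committed data files only, in commit order."""
        rows = []
        for entry in sorted(os.listdir(self.log_dir)):
            with open(os.path.join(self.log_dir, entry)) as f:
                for data_name in json.load(f)["add"]:
                    with open(os.path.join(self.root, data_name)) as d:
                        rows.extend(json.load(d))
        return rows


with tempfile.TemporaryDirectory() as root:
    table = TinyLakehouseTable(root)
    table.commit([{"sku": "A1", "qty": 2}])
    table.commit([{"sku": "B2", "qty": 1}])
    # A data file that was written but never committed stays invisible to readers.
    with open(os.path.join(root, "part-uncommitted.json"), "w") as f:
        json.dump([{"sku": "ZZ", "qty": 99}], f)
    committed_rows = table.read()

print(committed_rows)
```

The key design choice is that a commit is a single atomic step on the log, so a half-written data file can never appear in query results. Real formats build schema enforcement, time travel, and file compaction on top of the same foundation.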

The Strategic Hybrid Cloud Model

Finally, a hybrid cloud architecture offers a strategic middle path, blending your private, on-premise infrastructure with public cloud services. This model is a great fit for companies that have to balance the need for scale with strict security, compliance, or data sovereignty rules. A bank, for example, might keep its most sensitive customer data on its own private servers but use the public cloud's immense processing power to run its fraud detection algorithms.

This approach is picking up serious momentum as companies look to get the most out of their IT investments. The global hybrid cloud market, valued at $130.87 billion in 2024, is expected to swell to $329.72 billion by 2030. This growth is fueled by the demand for secure and compliant AI-powered data analysis. You can discover more about the rise of hybrid cloud analytics in recent market analyses.
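In the bank example, what makes a hybrid setup work is an explicit placement policy that decides where each dataset may live and be processed. Below is a hypothetical Python sketch of such a rule; the sensitivity tiers, dataset names, and "compute-heavy" flag are invented for illustration, and a real policy would come from your own compliance and data-sovereignty requirements.

```python
from dataclasses import dataclass
from enum import Enum


class Sensitivity(Enum):
    PUBLIC = 1
    INTERNAL = 2
    RESTRICTED = 3  # e.g. raw customer PII subject to data-residency rules


@dataclass
class Dataset:
    name: str
    sensitivity: Sensitivity
    compute_heavy: bool


def placement(ds: Dataset) -> str:
    """Hypothetical hybrid policy: restricted data never leaves the private environment;
    everything else may burst to the public cloud when it needs large-scale compute."""
    if ds.sensitivity is Sensitivity.RESTRICTED:
        return "on-prem"
    return "public-cloud" if ds.compute_heavy else "on-prem"


datasets = [
    Dataset("customer_accounts", Sensitivity.RESTRICTED, compute_heavy=False),
    Dataset("tokenized_transactions", Sensitivity.INTERNAL, compute_heavy=True),
    Dataset("branch_locations", Sensitivity.PUBLIC, compute_heavy=False),
]
for ds in datasets:
    print(f"{ds.name}: {placement(ds)}")
```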

Choosing the right architecture is a foundational step. To help clarify the differences, here’s a quick comparison of the patterns we've discussed.

Cloud Architecture Patterns Compared

| Pattern | Primary Use Case | Data Structure | Key Advantage |
|---|---|---|---|
| Data Warehouse | Business Intelligence & Reporting | Highly Structured, Pre-processed | Query Speed & Data Reliability |
| Data Lake | AI/ML & Exploratory Analysis | Raw (Unstructured & Semi-structured) | Flexibility & Scalability for Big Data |
| Lakehouse | Unified BI & AI/ML | Mixed (Structured & Unstructured) | Single Platform for All Data Workloads |
| Hybrid Cloud | Regulated Industries & Workload Optimization | Varies based on location (on-prem/cloud) | Balances Security, Compliance & Scale |

Each of these patterns serves a distinct purpose. The key is to match the architecture to your specific data strategy and business needs, ensuring you have a robust, scalable, and cost-effective foundation for all your analytical efforts.

How Industries Win with Cloud Analytics

The real magic of cloud computing for data analysis happens when you see it applied in the real world. While the theory and architecture patterns are essential building blocks, it's the success stories that truly show how powerful this technology can be. Across every sector imaginable, companies are using the cloud to finally solve old problems and discover entirely new opportunities.

These aren't just small, incremental tweaks. We're talking about fundamental shifts in how businesses operate, compete, and connect with their customers. Let’s look at how four key industries are turning cloud-based data into a serious competitive edge, focusing on the specific problems they faced, the solutions they built, and the incredible results they achieved.

Retail Revolutionizes the Customer Experience

For any retailer, predicting what customers will want to buy is a constant, high-stakes challenge. If you overstock, you’re stuck with wasted capital and deep discounts. If you understock, you miss out on sales and leave shoppers disappointed. A major clothing brand was caught in this very trap, finding that their historical sales data just wasn't cutting it for predicting fast-moving fashion trends.

Their solution? They built a demand forecasting platform on a public cloud. By creating a central data lake, they could finally pull in a much richer mix of information beyond simple sales figures. This included:

- Social media sentiment to catch emerging trends as they happened.

- Real-time website traffic and clickstream data to see which items were getting the most attention.

- Regional weather forecasts to better predict demand for seasonal items like coats or swimwear.

Using the cloud’s built-in machine learning services, they developed models that crunched all this diverse data to generate remarkably accurate sales forecasts. The cloud's elastic nature was key here, letting them ramp up processing power during peak seasons without a hitch. The payoff was huge: a 25% reduction in overstock and a 15% boost in sales for items they forecasted correctly.

Healthcare Predicts and Prevents Disease

In the world of healthcare, the ability to analyze patient data can quite literally be a matter of life and death. The problem is that this critical information is often fragmented, locked away in separate, incompatible systems. A large hospital network set an ambitious goal: to shift from simply treating diseases to proactively preventing them by identifying patients at high risk for chronic conditions like diabetes.

To do this securely, they chose a hybrid cloud architecture. This approach gave them the best of both worlds.

By keeping sensitive patient records on their secure, on-premise servers but using the public cloud for large-scale, anonymized data analysis, they found the perfect blend of security and analytical power.

They used cloud analytics tools to process years of anonymized electronic health records (EHRs), lab results, and genomic data. Machine learning algorithms were then trained to spot subtle patterns and risk factors that would be completely invisible to a human. Today, these models give doctors a risk score for each patient, flagging those who would benefit most from early interventions. This program has already led to a 30% improvement in early detection rates for at-risk individuals. To see the kind of people driving these innovations, you can learn more about the team at HydraNode and their work.
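One common way to prepare records for cloud-side analysis is keyed pseudonymization: direct identifiers are replaced with tokens generated by a secret key that never leaves the private environment. Here is a minimal Python sketch using HMAC-SHA256 from the standard library; the field names and record are hypothetical. Keyed hashing is pseudonymization rather than full anonymization, since quasi-identifiers like age can still help re-identify people, which is why the sketch also coarsens age into a band.

```python
import hashlib
import hmac
import secrets

# The secret key stays on-premise; without it, tokens can't be linked back to patients.
# In practice it would live in a key management system, not in application code.
PSEUDONYM_KEY = secrets.token_bytes(32)


def pseudonymize(patient_id: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Replace a direct identifier with a keyed, deterministic token (HMAC-SHA256)."""
    return hmac.new(key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_for_cloud(record: dict) -> dict:
    """Drop direct identifiers and keep only what the analysis needs (hypothetical schema)."""
    return {
        "patient_token": pseudonymize(record["patient_id"]),
        "age_band": f"{record['age'] // 10 * 10}s",  # coarsen age to reduce re-identification risk
        "hba1c": record["hba1c"],
    }


on_prem_record = {"patient_id": "MRN-001234", "name": "Jane Doe", "age": 57, "hba1c": 6.1}
cloud_record = prepare_for_cloud(on_prem_record)
print(cloud_record)
```

Because the token is deterministic for a given key, the same patient's records still line up across datasets in the cloud, while re-linking a token to a real person requires going back on-premise.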

Finance Fights Fraud in Real Time

Financial institutions are locked in a perpetual arms race with fraudsters. Traditional fraud detection systems, which often ran in batches overnight, were simply too slow to catch sophisticated scams as they unfolded.

A leading financial services company tackled this by moving its fraud detection system to a cloud platform built for real-time stream processing. Now, every single transaction—from a credit card swipe to a wire transfer—is analyzed the moment it happens. The system instantly compares each event against complex fraud patterns and a customer's known behavior.

If a transaction looks suspicious, the system can block it automatically and trigger an immediate alert to both the customer and the fraud team. This practical application of cloud computing for data analysis has slashed successful fraudulent transactions by over 60%, saving the company millions and giving customers peace of mind.
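A real-time system scores each event as it arrives instead of waiting for a nightly batch. As a minimal sketch of the "customer's known behavior" part, assuming a single feature (transaction amount) and a made-up z-score threshold, the Python below keeps a running profile per customer with Welford's online algorithm and blocks amounts far above that profile. Production systems combine many such signals, usually with trained models, inside a stream-processing engine.

```python
import math
from collections import defaultdict


class RunningStats:
    """Welford's online algorithm: mean and variance without storing history."""

    def __init__(self):
        self.n, self.mean, self.m2 = 0, 0.0, 0.0

    def update(self, x: float) -> None:
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    def std(self) -> float:
        return math.sqrt(self.m2 / (self.n - 1)) if self.n > 1 else 0.0


profiles = defaultdict(RunningStats)  # one behavioral profile per customer


def score(customer: str, amount: float, z_limit: float = 4.0, min_history: int = 5) -> str:
    """Check each transaction against the customer's own history as it arrives."""
    stats = profiles[customer]
    decision = "approve"
    if stats.n >= min_history and stats.std() > 0:
        z = (amount - stats.mean) / stats.std()
        if z > z_limit:  # unusually large for this customer
            decision = "block_and_alert"
    if decision == "approve":
        stats.update(amount)  # only learn from transactions that were accepted
    return decision


stream = [("c1", a) for a in (42.0, 38.5, 51.0, 45.0, 40.0, 47.5, 2500.0, 44.0)]
decisions = []
for customer, amount in stream:
    decision = score(customer, amount)
    decisions.append(decision)
    print(customer, amount, decision)
```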

Manufacturing Enables Predictive Maintenance

On a factory floor, a single unexpected equipment failure can halt the entire production line, racking up costs of thousands of dollars per minute. A global automotive manufacturer knew they had to get ahead of these breakdowns.

Their approach was to install thousands of Internet of Things (IoT) sensors on their assembly line machinery. These sensors constantly stream data—like temperature, vibration, and energy usage—to a central cloud IoT platform.

This information is fed into specialized machine learning models trained to recognize the tell-tale signs of impending failure. When the system detects an anomaly, it automatically generates a maintenance ticket for that specific machine, allowing technicians to schedule repairs before a catastrophic failure occurs. This predictive maintenance program has cut unplanned downtime by an impressive 40% and significantly extended the life of their expensive equipment.
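The "detect an anomaly, open a ticket" loop can be sketched in a few lines of Python. This simplified version swaps the trained model for a plain rule: the rolling mean of vibration readings must stay above a limit for several consecutive windows before a ticket is raised. The machine ID, window size, threshold, and readings are all hypothetical.

```python
from collections import deque
from statistics import mean


class VibrationMonitor:
    """Raise a maintenance ticket when the rolling mean of vibration stays high.

    The window size, limit, and persistence count are illustrative assumptions,
    standing in for what a trained model would learn from historical failures.
    """

    def __init__(self, machine_id: str, window: int = 5, limit_mm_s: float = 7.0, persist: int = 3):
        self.machine_id = machine_id
        self.readings = deque(maxlen=window)
        self.limit = limit_mm_s
        self.persist = persist
        self.consecutive_high = 0
        self.ticket_open = False

    def ingest(self, vibration_mm_s: float):
        self.readings.append(vibration_mm_s)
        if len(self.readings) < self.readings.maxlen:
            return None  # not enough data for a stable rolling mean yet
        if mean(self.readings) > self.limit:
            self.consecutive_high += 1
        else:
            self.consecutive_high = 0
        # Require several high windows in a row so a single spike doesn't trigger a ticket.
        if self.consecutive_high >= self.persist and not self.ticket_open:
            self.ticket_open = True
            return {"machine": self.machine_id, "action": "schedule_maintenance",
                    "rolling_mean_mm_s": round(mean(self.readings), 2)}
        return None


monitor = VibrationMonitor("press-17")
stream = [4.1, 4.3, 4.0, 4.2, 4.4, 15.0, 4.3, 4.1, 4.2, 6.5, 8.0, 9.5, 10.2, 11.0, 12.1]
tickets = [t for t in map(monitor.ingest, stream) if t is not None]
print(tickets)
```

The one-off spike to 15.0 is absorbed by the rolling window, while the steady upward drift at the end produces exactly one ticket.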


Building Your Cloud Data Roadmap

Jumping into cloud analytics without a clear plan is a recipe for disaster. It’s a bit like trying to build a house without a blueprint—you might end up with something standing, but it won’t be the sturdy, functional home you envisioned. A well-thought-out roadmap is what turns a pile of powerful technologies into a solution that actually moves your business forward.

Think of the following steps as your guide. This isn't just about theory; it's a practical action plan for getting your cloud data initiative off the ground or fine-tuning an existing one. By following these best practices, you can sidestep common headaches and start seeing a real return on your investment much faster.

Start with Business Goals, Not Technology

I’ve seen this mistake countless times: a team gets excited about a shiny new tool before they’ve even defined the problem they're trying to solve. The first question should never be, "Which cloud service do we need?" It should always be, "What business outcome are we trying to drive?"

Are you looking to pinpoint why customers are leaving? Or maybe you need to untangle a complex supply chain to find efficiencies. Perhaps you want to get new products to market faster. Your answer to that question is what will truly guide your technical choices, from the data you collect to the analytical models you build.

A successful cloud data strategy is always a business strategy first. The technology is simply the means to an end, not the end itself. Let your goals drive your technical decisions.

Choose the Right Cloud Provider

Once you have your business objectives locked down, it’s time to look at the major players: Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP). At a high level, they offer similar services, but the devil is in the details, and each has its own distinct personality.

- AWS is the market leader with the most extensive set of tools. It's a mature, go-to option that can handle almost anything you throw at it.

- Azure shines in the corporate world, especially if you're already living in the Microsoft ecosystem. Its integration with tools like Office 365 and Active Directory is incredibly smooth.

- GCP has built a strong reputation in data analytics and machine learning. If your work involves heavy number-crunching or modern container-based applications with Kubernetes, it’s a serious contender.

Weigh them against your specific project requirements, your team's current skillset, and their pricing structures to see which one makes the most sense for you.

Master Data Governance and Security

Moving sensitive data to the cloud means that governance and security have to be top-of-mind from the very beginning, not an afterthought. You need to establish crystal-clear rules for who can access what data, when, and why. This includes everything from ensuring data quality to meeting compliance standards.

Remember the "shared responsibility model." Your cloud provider handles security of the cloud (the physical data centers and the hardware), but security in the cloud, meaning your data, configurations, and user access, is on you. That means diligently using encryption, managing user access, and constantly keeping an eye out for potential threats.
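On your side of that model, access control usually comes down to explicit, default-deny rules plus an audit trail. The minimal Python sketch below assumes a hypothetical role-to-permission map and dataset names; in practice you would express the same intent in your provider's identity and access management (IAM) policies rather than in application code.

```python
# Hypothetical role -> permitted (action, dataset) pairs. Anything not listed is denied.
POLICY = {
    "analyst":        {("read", "sales_curated"), ("read", "marketing_curated")},
    "data_scientist": {("read", "sales_curated"), ("read", "raw_events")},
    "data_engineer":  {("read", "raw_events"), ("write", "raw_events"),
                       ("write", "sales_curated")},
}

audit_log = []  # who asked for what, and whether they got it


def is_allowed(role: str, action: str, dataset: str) -> bool:
    """Least privilege: allow only what the role explicitly grants, and record every decision."""
    allowed = (action, dataset) in POLICY.get(role, set())
    audit_log.append((role, action, dataset, "ALLOW" if allowed else "DENY"))
    return allowed


print(is_allowed("analyst", "read", "sales_curated"))     # True
print(is_allowed("analyst", "read", "raw_events"))        # False
print(is_allowed("contractor", "read", "sales_curated"))  # False: unknown role
```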

Manage Costs with FinOps Principles

Cloud bills can get out of hand quickly if you're not paying attention. That's where FinOps comes in. It’s not just a tool, but a cultural mindset that brings financial discipline to the flexible, pay-as-you-go nature of the cloud. In practice, this means:

- Monitoring your spending in near real-time so there are no surprises.

- Optimizing your setup by rightsizing resources and shutting down anything that’s sitting idle.

- Forecasting future expenses to keep your budget on track.
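Two of those practices, spotting idle resources and forecasting the month-end bill, are simple enough to sketch. The Python below is illustrative only: the inventory, hourly prices, 5% idle threshold, 730-hour month, and naive run-rate forecast are all assumptions. In practice the inputs would come from your provider's billing exports and monitoring metrics.

```python
from dataclasses import dataclass


@dataclass
class Resource:
    name: str
    hourly_cost: float   # hypothetical on-demand price in USD
    avg_cpu_pct: float   # average utilization over the review period


def find_idle(resources, idle_below_pct=5.0):
    """Optimize: flag resources that cost money but do almost no work."""
    return [r for r in resources if r.avg_cpu_pct < idle_below_pct]


def monthly_cost(resources, hours_per_month=730):
    """Monitor: what this set of resources costs if left running all month."""
    return sum(r.hourly_cost for r in resources) * hours_per_month


def projected_month_end(spend_to_date, day_of_month, days_in_month):
    """Forecast: naive run-rate, assuming the rest of the month looks like the days so far."""
    return spend_to_date / day_of_month * days_in_month


inventory = [
    Resource("etl-cluster", 2.00, 55.0),
    Resource("dev-notebook", 0.50, 1.2),
    Resource("old-test-db", 0.25, 0.4),
    Resource("dashboard-api", 0.40, 30.0),
]
idle = find_idle(inventory)
print("Idle:", [r.name for r in idle])
print(f"Monthly run cost: ${monthly_cost(inventory):,.2f}")
print(f"Savings from shutting down idle resources: ${monthly_cost(idle):,.2f}")

BUDGET = 2_500.00
forecast = projected_month_end(spend_to_date=900.00, day_of_month=10, days_in_month=30)
print(f"Forecast ${forecast:,.2f} vs budget ${BUDGET:,.2f}:", "OVER" if forecast > BUDGET else "ok")
```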

Foster a Data-Driven Culture

Finally, and this might be the most important point, the best technology in the world is useless without the right people and culture to support it. A successful cloud analytics program is about more than just a platform; it's about fundamentally changing how your organization makes decisions.

You have to invest in training your people. Give them the skills and the access they need to explore data and find their own insights. Work to break down the silos that keep valuable information locked away in separate departments. When everyone in the organization starts speaking the language of data, that’s when you truly unlock the power of your cloud investment.

Common Questions About Cloud Data Analysis


Moving your data analysis to the cloud is a big step, and it's natural to have questions. Let's walk through some of the most common concerns to give you a clearer picture.

How Secure Is My Data, Really?

This is usually the first question on everyone's mind. The short answer is: cloud security is a partnership. It works on what's called a shared responsibility model. Think of it like a secure storage facility. The company provides the tough building, the guards, and the surveillance cameras—that’s the cloud provider securing the physical infrastructure.

But you're still the one who holds the key to your specific unit. You're responsible for deciding who gets a key (access controls), what's inside the boxes (data encryption), and keeping an eye out for anything suspicious. The good news is that major cloud platforms offer security tools so advanced they often blow away what most individual companies could realistically build and maintain on their own.

Which Cloud Provider Is Best?

There’s no single "best" provider—it’s all about finding the best fit for you. The big three players, Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP), each have their own strengths.

- AWS is the longtime market leader and has the most comprehensive collection of services and tools. It's a go-to for many because of its maturity and massive community.

- Azure shines in the corporate world, especially for businesses already heavily invested in Microsoft products. Its integration with things like Office 365 and Active Directory is seamless.

- Google Cloud has built a reputation for its powerful capabilities in data analytics, machine learning, and AI. If your work is heavily focused on these areas, GCP is a very strong contender.

The right choice really boils down to your team's existing skills, your current technology, and what you're trying to achieve.

What Are the Biggest Migration Challenges?

The biggest migration challenges often stem not from technology but from people and processes. Key hurdles include managing unexpected costs, closing internal skill gaps, and handling the sheer complexity of moving legacy systems. That's why a phased approach, migrating one workload at a time, is crucial.

Ready to master your IT certifications without the guesswork? HydraNode delivers AI-generated practice exams that adapt to your knowledge gaps, helping you study smarter and pass faster. Get started with our advanced exam preparation platform.